BERT Unveiled: Revolutionizing Search Understanding in the Digital Era

In digital information retrieval, Google’s BERT model has reshaped the way search engines interpret user queries. BERT stands for Bidirectional Encoder Representations from Transformers. Google published it in 2018 and began applying it to Search in 2019, marking a major shift in natural language processing: search engines could now weigh the context around each word in a query to infer user intent. This article walks through BERT’s architecture and its application to search, then looks at what the model means for user experience, website content, and SEO strategy.

Language Understanding Redefined: Google’s Motivation Behind BERT

Google built BERT to refine language understanding in search. Earlier models struggled to capture the intricacies of human language, so Google needed more nuanced, context-aware comprehension. In a digital landscape full of diverse queries and user intents, relevance became paramount. BERT, with its bidirectional approach and transformer architecture, was Google’s answer to the problem of deciphering language structure and semantics, and it now plays a pivotal role in the accuracy and sophistication of search results.

BERT’s Linguistic Prowess: The Distinctive Features of Google’s Language Model

What distinguishes BERT from its predecessors is its ability to pick up the subtleties of context and user intent within search queries. Unlike earlier language models that read text in one direction, BERT takes a bidirectional approach: it considers the full context of a word by analyzing both the words that precede it and the words that follow it. This helps the model capture the relationships between words and handle intricate sentence structures. BERT also builds on the transformer architecture, which processes all the words in a passage in parallel and can relate words that sit far apart. Together, these two features let search engines return more accurate and contextually relevant results, which is why BERT is regarded as a major step in the evolution of search algorithms.
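The difference between one-directional and bidirectional context can be illustrated with a minimal Python sketch. It is not BERT itself: the function name `visible_context` and the sample query are hypothetical, and the code only shows which neighboring words are available when a model interprets a given word.

```python
def visible_context(tokens, position, bidirectional=True):
    """Return the tokens available for interpreting tokens[position].

    A left-to-right model sees only the words before the position;
    a bidirectional model also sees the words after it.
    """
    left = tokens[:position]
    right = tokens[position + 1:] if bidirectional else []
    return left + right


# Hypothetical query used purely for illustration.
query = "flights to paris from london".split()
pos = query.index("to")

print(visible_context(query, pos, bidirectional=False))  # ['flights']
print(visible_context(query, pos, bidirectional=True))   # ['flights', 'paris', 'from', 'london']
```

With only the left context, the word "to" is seen next to "flights" alone. With both sides visible, the model can also use "paris from london" to work out the direction of travel.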

Decoding Context: Understanding the Mechanics of the BERT Algorithm

- Bidirectional Contextualization: BERT stands for Bidirectional Encoder Representations from Transformers, indicating its unique ability to understand words in context by considering both their preceding and succeeding words.

- Transformer Architecture: BERT employs a transformer architecture, enabling it to process input data in parallel and capture long-range dependencies between words, facilitating a more comprehensive understanding of the context.

- Tokenization: The input text is tokenized into smaller units, allowing BERT to analyze the meaning of each token. BERT uses WordPiece tokenization, so a token is either a whole word or a subword fragment. This lets the model handle rare or unfamiliar words by breaking them into pieces it already knows.

- Embedding Layers: BERT utilizes embedding layers to convert tokens into numerical vectors, representing the semantic meaning of words. This step ensures that the model can work with the input data in a format suitable for analysis.

- Attention Mechanism: BERT employs an attention mechanism that assigns different weights to each token based on its relevance to others in the sentence. This attention to context allows BERT to capture the relationships between words and their impact on overall meaning.

- Training on Contextual Tasks: BERT is pre-trained on large text datasets using two contextual tasks: predicting words that have been masked out of a sentence, and predicting whether one sentence follows another. This pre-training phase allows the model to learn the intricacies of language and context before being fine-tuned for specific applications.

- Fine-Tuning for Specific Tasks: After pre-training, BERT can be fine-tuned for various natural language processing tasks, such as question answering or sentiment analysis. This adaptability makes BERT a versatile tool for a wide range of applications.

- Contextualized Word Representations: BERT generates contextualized word representations, considering the entire context of a sentence. This enables the model to understand the nuanced meanings of words in different contexts, leading to more accurate and context-aware search results.

- Decoding User Intent: By comprehensively understanding the context of words, BERT excels at decoding user intent in search queries. This capability allows search engines to deliver results that align more closely with the user’s specific needs and queries.
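Two of the steps listed above, tokenization and attention, can be sketched in plain Python. This is a simplified illustration under stated assumptions, not BERT’s actual implementation: the vocabulary `VOCAB` and the two-dimensional vectors are made up, and the attention function skips the learned projections and multiple heads that a real transformer uses.

```python
import math

# Hypothetical toy vocabulary; real BERT vocabularies hold roughly 30,000 entries.
VOCAB = {"search", "engine", "##s", "un", "##der", "##stand", "context", "[UNK]"}


def wordpiece(word, vocab=VOCAB):
    """Greedy longest-match-first subword split, in the style of WordPiece.

    Pieces that continue a word carry the "##" prefix.
    """
    pieces, start = [], 0
    while start < len(word):
        end, match = len(word), None
        while end > start:
            candidate = word[start:end]
            if start > 0:
                candidate = "##" + candidate
            if candidate in vocab:
                match = candidate
                break
            end -= 1
        if match is None:
            return ["[UNK]"]  # no known piece fits, so the whole word is unknown
        pieces.append(match)
        start = end
    return pieces


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_weights(query_vec, key_vecs):
    """Scaled dot-product attention weights of one token over all tokens."""
    scale = math.sqrt(len(query_vec))
    scores = [sum(q * k for q, k in zip(query_vec, key)) / scale for key in key_vecs]
    return softmax(scores)


tokens = [p for w in "search engines understand context".split() for p in wordpiece(w)]
print(tokens)  # ['search', 'engine', '##s', 'un', '##der', '##stand', 'context']

# Made-up vectors for the tokens "river", "bank", "money".
vectors = [[0.9, 0.1], [1.0, 0.2], [0.1, 1.0]]
weights = attention_weights(vectors[1], vectors)  # how "bank" attends to each token
print([round(w, 3) for w in weights])
```

In this toy setup "bank" gives more weight to "river" than to "money" because their vectors point in similar directions. In a trained model those vectors are learned from data rather than chosen by hand.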

Contextual Clarity: How BERT Enhances the User Search Experience

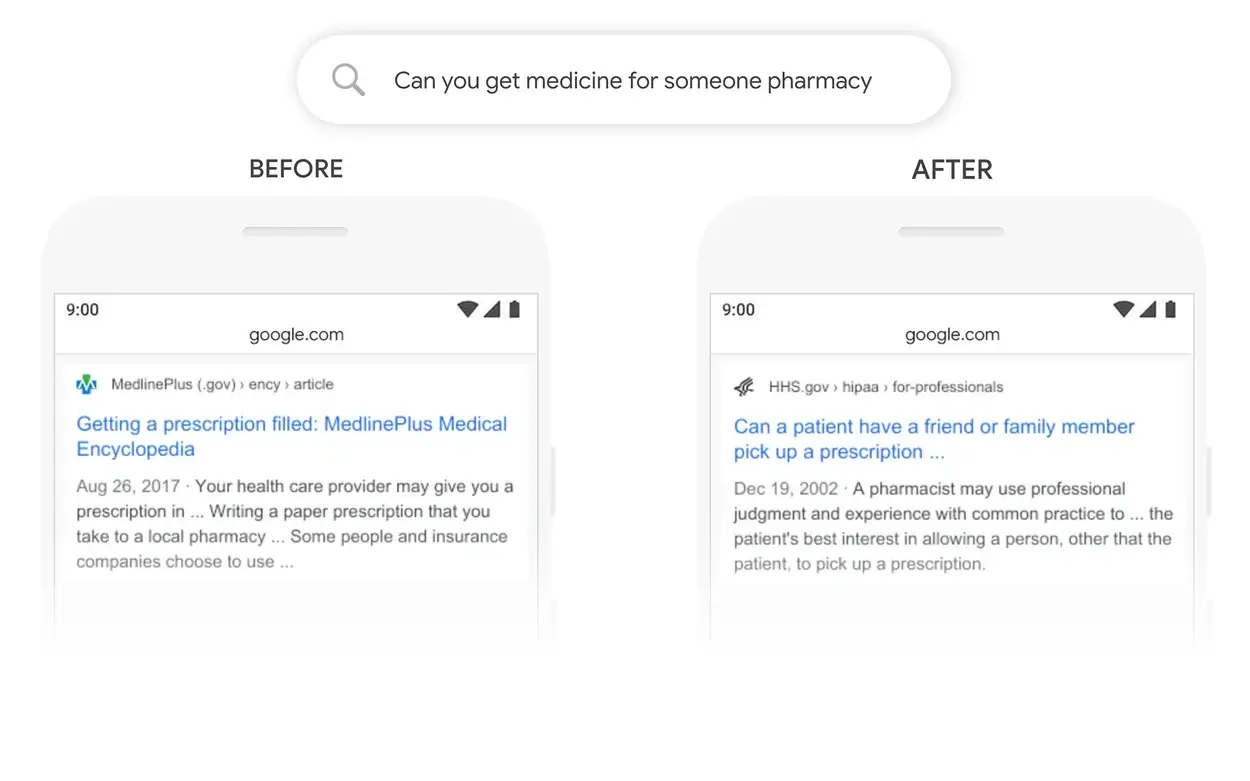

- Improved Understanding of User Intent: BERT’s contextual analysis enables search engines to better grasp the nuanced intent behind user queries, leading to more accurate interpretation of search intent.

- Enhanced Relevance in Search Results: Users experience a significant improvement in the relevance of search results, as BERT considers the context of each word in a query, delivering more precise and contextually appropriate information.

- Natural Language Understanding: BERT’s linguistic finesse allows it to understand and interpret natural language more effectively, making the user experience feel more conversational and intuitive.

- Addressing Long-Tail Queries: BERT is particularly effective in understanding and responding to long-tail queries, which often contain more conversational or specific language. This ensures that users receive relevant results even for less common or more detailed search queries.

- Reduced Ambiguity: By considering the context of words, BERT reduces ambiguity in search queries, helping search engines discern the intended meaning and providing results that align more closely with what users are seeking.

- Enhanced SERP Snippets: BERT contributes to the generation of more informative Search Engine Results Page (SERP) snippets. Users can get a better preview of the content on a page directly from the search results, aiding in quicker decision-making.

- Results That Fit the Individual Query: BERT does not personalize results based on a user’s history or preferences. Its contribution is interpreting the exact wording of each query in context, so the information presented is more closely aligned with what that particular user is asking for.

- Increased User Satisfaction: The overall effect of BERT on the user is an increased level of satisfaction, as the search experience becomes more intuitive, relevant, and closely aligned with the user’s language and intent.

- Support for Conversational Queries: BERT is adept at handling conversational language, allowing search engines to better understand and respond to queries in a more conversational tone, making the interaction between the user and the search engine feel more natural.

Content Precision: BERT’s Impact on Website Content Optimization

- Demand for Contextually Relevant Content: BERT’s focus on context places a premium on website operators producing content that is not only keyword-rich but also contextually relevant. This shift necessitates a deeper understanding of user intent to create content that resonates with search queries.

- Quality Over Quantity: BERT encourages website operators to prioritize content quality over sheer quantity. Instead of relying solely on keyword stuffing, the emphasis is on crafting well-structured, informative content that comprehensively addresses user needs.

- Long-Tail Keyword Optimization: With BERT’s proficiency in understanding long-tail queries, website operators benefit from optimizing content for more specific and detailed keywords. This approach ensures visibility in search results for users with niche or specific search intents.

- User-Centric Content Strategy: BERT underscores the importance of adopting a user-centric content strategy. Website operators are prompted to create content that genuinely serves the interests and queries of their target audience, fostering a more engaging and satisfying user experience.

- Adaptation to Natural Language Queries: BERT’s capability to comprehend natural language queries requires website operators to adapt their content to mirror the conversational tone of users. This alignment enhances the likelihood of content being surfaced for voice searches and other conversational interactions.

- Improved Click-Through Rates (CTR): The precision in understanding user intent brought about by BERT contributes to higher click-through rates. Websites with content that closely matches user queries are more likely to attract clicks, benefiting from increased visibility and user engagement.

- Focus on Informational Depth: BERT’s emphasis on context urges website operators to delve into the informational depth of topics. Content that provides in-depth insights, answers, and solutions stands a better chance of ranking higher in search results, establishing authority and credibility.

- Continuous Monitoring and Adaptation: Website operators need to adopt an agile approach to content creation and optimization. Regular monitoring of search trends, user behavior, and BERT updates allows for continuous adaptation to evolving search dynamics, ensuring sustained visibility.

- Enhanced User Experience Signals: BERT indirectly rewards websites that prioritize user experience. By delivering contextually relevant content, websites improve user satisfaction, reduce bounce rates, and potentially enhance other user experience signals that influence search rankings.

Contextual SEO Renaissance: Strategies for Success in the BERT Era

- Content Quality Over Keyword Density: BERT’s emphasis on context marks a shift from traditional SEO strategies centered around keyword density. Success in the BERT era hinges on producing high-quality content that addresses user intent and provides valuable information.

- Contextual Keyword Research: SEO strategies now require a more nuanced approach to keyword research. Understanding the context in which keywords are used becomes crucial, guiding SEO professionals to choose and optimize for terms that align with user intent.

- Long-Tail Keyword Optimization: BERT’s ability to understand long-tail queries encourages SEO practitioners to optimize for more specific and detailed keywords. This approach enhances visibility for niche queries, attracting users with precise search intents.

- User Intent Optimization: Aligning content with user intent is paramount in the BERT era. SEO strategies should focus on understanding the varied intents behind search queries and tailoring content to directly address the needs and questions of the target audience.

- Natural Language Processing (NLP) Integration: BERT’s proficiency in natural language processing prompts SEO professionals to integrate NLP techniques. This involves crafting content in a more conversational tone, aligning with how users naturally express themselves in search queries.

- Structured Data Markup for Context: Utilizing structured data markup becomes crucial for providing additional context to search engines. This helps search algorithms better understand the relationships and context within content, improving the chances of appearing in relevant search results.

- Mobile-First and Voice Search Optimization: BERT’s impact extends to mobile and voice search. SEO strategies must prioritize mobile optimization and cater to conversational queries to align with the growing trend of voice-based interactions and searches.

- User Experience Signals: BERT indirectly emphasizes user experience signals, influencing SEO rankings. Content that satisfies user intent and provides a positive experience, as measured by metrics like low bounce rates and longer dwell times, is likely to receive favorable SEO outcomes.

- Continuous Monitoring and Adaptation: SEO professionals need to stay vigilant and adapt strategies in response to evolving search dynamics and BERT updates. Continuous monitoring of search trends and user behavior allows for agile adjustments to maintain SEO success.

- Evolving Link Building Strategies: BERT’s impact extends to link building, requiring a shift towards earning high-quality, contextually relevant backlinks. SEO practitioners should focus on building links from authoritative sources that align with the thematic context of their content.

Adapting Language: SEO Strategies for Success in the BERT Landscape

- Contextual Keyword Research: Conduct comprehensive keyword research with a focus on understanding the context in which keywords are used. Prioritize long-tail keywords that capture specific user intents and align with the content’s thematic context.

- User Intent Analysis: Invest time in analyzing and understanding user intent behind search queries. Tailor content to directly address the needs, questions, and objectives that users are likely to have when entering specific search terms.

- Natural Language Integration: Adapt content creation strategies to incorporate natural language patterns. Craft content in a conversational tone, mirroring how users naturally express themselves in search queries, thereby enhancing the chances of aligning with BERT’s contextual understanding.

- Content Structure and Organization: Organize content in a structured manner, ensuring that it provides clear and comprehensive information. Use headings, subheadings, and bullet points to make content more digestible and help search engines understand the hierarchy and flow of information.

- Mobile Optimization: Prioritize mobile optimization, considering the growing importance of mobile devices in search. Ensure that websites are responsive, and content is easily accessible and navigable on various screen sizes.

- Voice Search Considerations: Optimize content for voice search by anticipating and addressing natural language queries. Create content that answers questions in a clear and concise manner, as voice searches tend to be more conversational and question-oriented.

- Structured Data Markup: Implement structured data markup to provide additional context to search engines. This can enhance the visibility of rich snippets in search results, offering users more information directly on the SERP.

- Quality Content Creation: Prioritize the creation of high-quality, informative, and engaging content. BERT rewards content that provides value to users, and search engines are more likely to rank such content higher in search results.

- Regular Content Audits: Conduct regular content audits to ensure that existing content aligns with BERT’s emphasis on context and user intent. Update and optimize older content to maintain its relevance and effectiveness in addressing user needs.

- User Experience Optimization: Focus on optimizing the overall user experience on the website. This includes improving site speed, ensuring intuitive navigation, and creating a seamless user journey to enhance user satisfaction and positively influence SEO.

- Adaptability to Algorithm Updates: Stay informed about BERT updates and other algorithm changes. Being adaptable to evolving search dynamics ensures that website operators can adjust their strategies in response to the latest developments in search engine algorithms.
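The structured data item in the list above can be made concrete with a short Python sketch that builds a JSON-LD snippet for a page. The helper name `article_jsonld` and all sample values are hypothetical. The field names (`@context`, `@type`, `headline`, `author`, `datePublished`, `description`) come from the schema.org Article vocabulary, and which fields a search engine actually uses is an assumption to verify against its own guidelines.

```python
import json


def article_jsonld(headline, author_name, date_published, description):
    """Build a JSON-LD <script> tag describing an article (schema.org vocabulary)."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author_name},
        "datePublished": date_published,  # ISO 8601 date string
        "description": description,
    }
    return (
        '<script type="application/ld+json">\n'
        + json.dumps(data, indent=2)
        + "\n</script>"
    )


# Sample values are placeholders, not real page data.
print(article_jsonld(
    headline="How BERT Changes Search",
    author_name="Jane Doe",
    date_published="2024-01-15",
    description="An overview of BERT and its effect on SEO strategy.",
))
```

The resulting tag would be placed in the page’s HTML so that crawlers can read the article’s key facts without having to infer them from the body text.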

Myth-Busting BERT: Clarifying Misconceptions About Language Understanding in Search

In the ever-evolving landscape of search engine algorithms, it’s essential to dispel common myths surrounding the BERT algorithm to gain a clear understanding of its impact. Contrary to some misconceptions, BERT does not penalize websites; rather, its primary focus is on improving the relevance and accuracy of search results. Another prevailing myth is that BERT solely relies on keywords, overlooking its nuanced ability to comprehend the context and intent behind those keywords. It’s important to recognize that BERT does not favor short content over long-form or vice versa; instead, it prioritizes content that best serves the user’s intent, regardless of its length. By debunking these myths, we can appreciate BERT’s role in enhancing language understanding in search, fostering a more accurate and user-centric online experience.

Conclusion

The arrival of Google’s BERT algorithm marks a significant milestone for search engine optimization, reshaping strategies for content creation and website optimization. BERT emphasizes context, user intent, and content relevance. It encourages a departure from traditional keyword-centric approaches, urging website operators and SEO practitioners to prioritize quality content that addresses what users are actually asking. Contrary to myths about penalties and content-length biases, BERT is a tool for refining language comprehension, and it improves the user experience by delivering more contextually relevant search results. By understanding how BERT works, website operators can align their strategies with evolving search algorithms and foster a more intuitive, user-centric online environment.